Wednesday, January 19, 2011

Hack on Ubuntu Cloud Utils

I have written up, step by step, what needs to be done to accomplish this task in a Blueprint. For those new to the term, Blueprints are Ubuntu's way of writing software specs in the open, where everyone can read and comment on them (don't you just love open!). So, if you're ready to start contributing, click that blueprint link, decide whether you want to hack on this tool, ping me, and let's start working on it! For any questions or discussions, please drop by #ubuntu-cloud on Freenode IRC (if you're new to IRC, use this web UI instead: http://cloud.ubuntu.com/community/irc-chat/). A great time to discuss this would be today (Wed) at 6pm UTC, during the weekly cloud community meeting. If you miss the meeting, no problemo: just talk in the channel anytime, most people are there all the time anyway. I'm already hyper excited. Any questions or feedback? Let me know in the comments below.

Monday, January 17, 2011

Help Ubuntu Server, Voice your Opinion

Hello Ubuntu Cloud and Server communities. Now is your chance to voice your opinion about Ubuntu Server and to help make it better! The Ubuntu marketing team would like more insight into why your organization chose to deploy Ubuntu on the server or in the cloud (or why it did not), as well as your opinion on how Ubuntu Server can improve. If you're an IT professional deploying or supporting Ubuntu Server or cloud in any capacity, please help make Ubuntu better by helping us understand your needs. Here's a survey designed for just that

Thanks!

Thursday, January 13, 2011

Ubuntu Server news storm

- Virtualization and LXC improvements

- Higher quality Java packaging

- Automated Server ISO testing, ensuring rock solid releases

- Even more Upstart polish

- Improving Ubuntu server's Cluster stack

- Datacenter power efficiency with powernap

- UEC and EC2 image improvements, pv-grub support

- Cloud "desktop" images! wow!

- HUGE project for cloud/data-center automatic deployment, management, monitoring and debugging. IMO this takes Ubuntu Server way above anything out there. The technology is all about picking and integrating the best-of-breed open-source server technologies. While you could always do that work yourself, having a tightly integrated stack that is known to work is what makes Ubuntu Server an easy and fun platform to build upon

Exciting times indeed! Check out the full interview at

Video link: http://www.youtube.com/watch?v=CWNjzwci5e8

Labels: canonical, cloud, datacenter, deployment, power, server, ubuntu, ubuntu-planet

Upgrade Lucid to Maverick on EC2

Let's download the list of EC2 images and pull out the Lucid EBS i386 AMIs

$ ec2-describe-images --all > /tmp/ec2-images

$ grep '099720109477/ebs/ubuntu-images/ubuntu-lucid-10.04-i386' /tmp/ec2-images

IMAGE ami-714ba518 099720109477/ebs/ubuntu-images/ubuntu-lucid-10.04-i386-server-20100427.1 099720109477 available public i386 machine aki-754aa41c ebs paravirtual

IMAGE ami-1234de7b 099720109477/ebs/ubuntu-images/ubuntu-lucid-10.04-i386-server-20100827 099720109477 available public i386 machine aki-5037dd39 ebs paravirtual

IMAGE ami-6c06f305 099720109477/ebs/ubuntu-images/ubuntu-lucid-10.04-i386-server-20100923 099720109477 available public i386 machine aki-3204f15b ebs paravirtual

IMAGE ami-480df921 099720109477/ebs/ubuntu-images/ubuntu-lucid-10.04-i386-server-20101020 099720109477 available public i386 machine aki-6603f70f ebs paravirtual

IMAGE ami-a2f405cb 099720109477/ebs/ubuntu-images/ubuntu-lucid-10.04-i386-server-20101228 099720109477 available public i386 machine aki-3af50453 ebs paravirtual
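If you would rather pick the newest image programmatically than read the grep output by eye, something like the following Python sketch could work. It assumes the column order seen in the listing above (image ID second, name third, kernel ID ninth) and that no column is empty; the function names are made up for this example.

```python
# Sketch only: parses text shaped like the `ec2-describe-images` listing above.
# Assumes whitespace-separated columns with no empty fields.

PREFIX = "099720109477/ebs/ubuntu-images/ubuntu-lucid-10.04-i386"

SAMPLE = """\
IMAGE ami-480df921 099720109477/ebs/ubuntu-images/ubuntu-lucid-10.04-i386-server-20101020 099720109477 available public i386 machine aki-6603f70f ebs paravirtual
IMAGE ami-a2f405cb 099720109477/ebs/ubuntu-images/ubuntu-lucid-10.04-i386-server-20101228 099720109477 available public i386 machine aki-3af50453 ebs paravirtual
"""


def parse_images(text):
    """Return (ami_id, name, kernel_id) for every IMAGE row in the listing."""
    rows = []
    for line in text.splitlines():
        fields = line.split()
        if len(fields) >= 9 and fields[0] == "IMAGE":
            rows.append((fields[1], fields[2], fields[8]))
    return rows


def latest_image(text, name_prefix):
    """Pick the row whose name sorts last; the names end in a build date."""
    matches = [row for row in parse_images(text) if row[1].startswith(name_prefix)]
    if not matches:
        return None
    return max(matches, key=lambda row: row[1])
```

Calling `latest_image(open("/tmp/ec2-images").read(), PREFIX)` would then give the AMI to pass to ec2-run-instances, provided the name suffix keeps sorting in date order.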

Start our instance. If you were already running a Lucid server you obviously wouldn't need this step, but for our demo we'll create a Lucid instance and upgrade it to Maverick

$ ec2-run-instances ami-a2f405cb --instance-type t1.micro -k default

RESERVATION r-33271c59 553172479171 default

INSTANCE i-05cd0169 ami-a2f405cb pending default 0 t1.micro 2011-01-12T20:42:12+0000 us-east-1d aki-3af50453 monitoring-disabled ebs paravirtual

$ ec2-describe-instances | awk '-F\t' '$1 == "INSTANCE" { print $4 }'

ec2-67-202-26-253.compute-1.amazonaws.com
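For readers less comfortable with awk, here is a rough Python equivalent of that one-liner. It assumes, as the awk command does, that the real ec2-describe-instances output is tab-separated and that the public DNS name is the fourth column; `public_hostnames` is a name invented for this sketch.

```python
# Sketch only: mirrors awk -F'\t' '$1 == "INSTANCE" { print $4 }'.
# Assumes tab-separated output with the public DNS name in column 4.

def public_hostnames(text):
    """Return the fourth tab-separated field of every INSTANCE row."""
    hosts = []
    for line in text.splitlines():
        fields = line.split("\t")
        # Unlike the awk version, skip rows whose DNS name is still empty
        # (for example while the instance is pending).
        if fields[0] == "INSTANCE" and len(fields) > 3 and fields[3]:
            hosts.append(fields[3])
    return hosts
```

You could feed it the captured output of ec2-describe-instances and ssh to the first entry it returns.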

Let's ssh into our to-be-upgraded server and install the latest Lucid updates

$ ssh ubuntu@ec2-67-202-26-253.compute-1.amazonaws.com

$ screen -S upgrade

$ sudo apt-get update

$ sudo apt-get dist-upgrade

Normally, you can only upgrade an LTS release to another LTS release. We'll change that setting so we can upgrade to Maverick, and then start the upgrade

$ sudo sed -i.bak -e 's@lts$@normal@' /etc/update-manager/release-upgrades

$ do-release-upgrade

Checking for a new ubuntu release

Done Upgrade tool signature

Done Upgrade tool

Done downloading

extracting 'maverick.tar.gz'

authenticate 'maverick.tar.gz' against 'maverick.tar.gz.gpg'

tar: Removing leading `/' from member names

Reading cache

Checking package manager

Reading package lists... Done

Building dependency tree

Reading state information... Done

Building data structures... Done

Reading package lists... Done

Building dependency tree

Reading state information... Done

Building data structures... Done

Updating repository information

WARNING: Failed to read mirror file

100% [Working]

Checking package manager

Reading package lists... Done

Building dependency tree

Reading state information... Done

Building data structures... Done

Calculating the changes

Calculating the changes

Do you want to start the upgrade?

2 installed packages are no longer supported by Canonical. You can still get support from the community.

3 packages are going to be removed. 29 new packages are going to be installed. 274 packages are going to be upgraded.

You have to download a total of 124M. This download will take about 15 minutes with a 1Mbit DSL connection and about 4 hours with a 56k modem.

Fetching and installing the upgrade can take several hours. Once the download has finished, the process cannot be cancelled.

Continue [yN] Details [d]
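To make the sed step at the start of that transcript less magical, here is a small Python sketch of the same substitution applied to a string. It assumes the file contains a line ending in "lts" (on a stock Lucid install that should be the `Prompt=lts` setting); `allow_normal_releases` is a name made up for this example, and the sketch does not touch any file.

```python
# Sketch only: the same edit as sed -e 's@lts$@normal@', done on a string.
import re


def allow_normal_releases(config_text):
    """Replace a trailing 'lts' on any line with 'normal'."""
    return re.sub(r"lts$", "normal", config_text, flags=re.MULTILINE)
```

Only lines that end in "lts" change, so comments and other settings in the file are left alone.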

Agree to any prompts during the upgrade until it finishes. At the end, let's not reboot the server; we power it off instead

System upgrade is complete.

Restart required

To finish the upgrade, a restart is required.

If you select 'y' the system will be restarted.

Continue [yN]n

$ sudo poweroff

What has happened is that the server has been upgraded from Lucid to Maverick, yet Amazon would still boot it with a Lucid kernel. That's because on EC2 the kernel is stored outside the EBS image itself. Recently, however, Amazon added the ability to boot with pv-grub, which is basically a way to chainload the kernel from inside the EBS image. That makes the cloud server behave just like a bare-metal server, which is always a good thing :) To get the AKI ID for the pv-grub kernel, we simply grep for the latest Maverick images, since those already have pv-grub attached to them

$ grep '099720109477/ebs/ubuntu-images/ubuntu-maverick-10.10-i386' /tmp/ec2-images

IMAGE ami-508c7839 099720109477/ebs/ubuntu-images/ubuntu-maverick-10.10-i386-server-20101007.1 099720109477 available public i386 machine aki-407d9529 ebs paravirtual

IMAGE ami-ccf405a5 099720109477/ebs/ubuntu-images/ubuntu-maverick-10.10-i386-server-20101225 099720109477 available public i386 machine aki-407d9529 ebs paravirtual

Now let's switch the upgraded instance to the pv-grub kernel and start it again

$ ec2-modify-instance-attribute i-05cd0169 --kernel aki-407d9529

kernel i-05cd0169 aki-407d9529

$ ec2-start-instances i-05cd0169

INSTANCE i-05cd0169 stopped pending

$ ec2-describe-instances

RESERVATION r-33271c59 553172479171 default

INSTANCE i-05cd0169 ami-a2f405cb ec2-50-16-133-253.compute-1.amazonaws.com ip-10-112-53-225.ec2.internal running default 0 t1.micro 2011-01-12T21:27:51+0000 us-east-1d aki-407d9529 monitoring-disabled 50.16.133.253 10.112.53.225 ebs paravirtual

BLOCKDEVICE /dev/sda1 vol-dc7bc9b4 2011-01-12T20:42:22.000Z
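If you have several instances to switch over, the two commands above are easy to script. The Python sketch below only builds the argument lists, using exactly the commands and the `--kernel` option shown in this post; the helper name is invented for the example, and actually running the commands (for instance with `subprocess.check_call`) is left to you.

```python
# Sketch only: builds the two command lines used above, nothing is executed.

def kernel_switch_commands(instance_id, kernel_id):
    """Return the commands that change the kernel and start the instance.

    The instance is expected to be stopped already, as it is here after
    `sudo poweroff`.
    """
    return [
        ["ec2-modify-instance-attribute", instance_id, "--kernel", kernel_id],
        ["ec2-start-instances", instance_id],
    ]
```

Keeping each command as a list avoids any shell quoting surprises if you later hand it to subprocess.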

We're done. Note that the instance came back with a new public hostname, so let's ssh into it and make sure it's running the Maverick kernel

$ ssh ubuntu@ec2-50-16-133-253.compute-1.amazonaws.com

$ uname -r

2.6.35-24-virtual
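The same check can be automated. This Python sketch assumes that a release string starting with 2.6.35 means the Maverick kernel was booted (Lucid shipped a 2.6.32 kernel); both function names are made up for the example.

```python
# Sketch only: classifies the string printed by `uname -r`.

def kernel_series(release):
    """'2.6.35-24-virtual' -> '2.6.35'."""
    return release.split("-", 1)[0]


def booted_maverick_kernel(release):
    """True if the release string belongs to the 2.6.35 (Maverick) series."""
    return kernel_series(release) == "2.6.35"
```

On the instance itself you could pass it `platform.release()` from the standard library, which returns the same string as uname -r.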

Voila, mission accomplished. Don't forget to terminate your instance

$ ec2-terminate-instances i-05cd0169

INSTANCE i-05cd0169 running shutting-down

Tuesday, January 11, 2011

Jono'ed at Dallas sprint

Thursday, January 6, 2011

Cloud LAMP Stack in 60 seconds

If you'd like to download the cloud-init file used, as well as other similar files, visit the awstrial project

Labels: canonical, cloud, cloud-init, ec2, LAMP, ubuntu, ubuntu-planet, wordpress

Monday, January 3, 2011

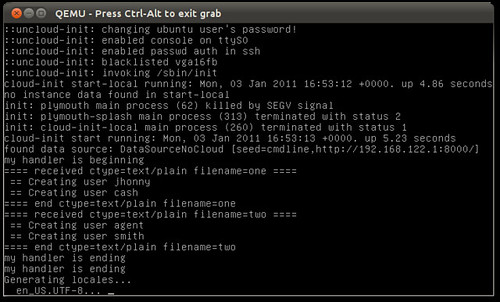

Advanced cloud-init custom handlers

We need the "write-mime-multipart" script from cloud-init. I would not recommend installing cloud-init on your own machine, since it is designed to run on cloud instances (not physical nodes). If you do install it on your own machine, it blocks the boot process waiting for the cloud user-data service to appear (which it never does), so you end up waiting a long time! To get the script, we just check out the code directly

bzr branch lp:cloud-init

You'll find that script in the tools/ directory. Assuming you can already run cloud-init on a local KVM, we now need to replace the user-data file, which in the previous article was written in cloud-config syntax, with a new file. The new user-data file is a multipart file composed of custom Python code and the data you want that code to chew on! Here is how you create the file

./write-mime-multipart --output user-data part-handler.txt one:text/plain two:text/plain
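To get a feel for what ends up in that user-data file, here is a rough Python sketch that builds a similar multipart document with the standard library's email package. This is not the write-mime-multipart script itself, just an approximation; the `build_user_data` name is invented, and the `text/part-handler` type given to part-handler.txt in the usage note is my assumption about how the tool classifies a file starting with "#part-handler".

```python
# Sketch only: approximates a multipart user-data file, not the real tool.
from email.mime.multipart import MIMEMultipart
from email.mime.text import MIMEText


def build_user_data(parts):
    """parts: iterable of (filename, text_subtype, content) tuples."""
    outer = MIMEMultipart()
    for filename, subtype, content in parts:
        part = MIMEText(content, subtype)  # gives Content-Type: text/<subtype>
        part.add_header("Content-Disposition", "attachment", filename=filename)
        outer.attach(part)
    return outer.as_string()
```

For the command above, the call would look like `build_user_data([("part-handler.txt", "part-handler", code), ("one", "plain", one), ("two", "plain", two)])`, where `code`, `one` and `two` hold the contents of the three files.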

Let's take a look at the contents of those files. Files "one" and "two" are the data, while "part-handler.txt" is the Python code, adapted from cloud-init. In our case, I chose to make the part handler a "user-creation" provider: you supply a list of user names in the data files, and the code loops over them, creating each one. Simple enough for an example, I hope. Let's check out the data files

$ cat one

jhonny

cash

$ cat two

agent

smith

These two files hold the 4 users to be created! I split them into 2 files just to show that you can have multiple input files. Now let's check out the code living in part-handler.txt

#part-handler
# vi: syntax=python ts=4

import os

def list_types():
    # return a list of mime-types that are handled by this module
    return ["text/plain", "text/go-cubs-go"]

def handle_part(data, ctype, filename, payload):
    # data: the cloudinit object
    # ctype: '__begin__', '__end__', or the specific mime-type of the part
    # filename: the filename for the part, or a dynamically generated name
    #           if no filename attribute is present
    # payload: the content of the part (empty for begin or end)
    if ctype == "__begin__":
        print("my handler is beginning")
        return
    if ctype == "__end__":
        print("my handler is ending")
        return

    print("==== received ctype=%s filename=%s ====" % (ctype, filename))
    # one user name per line in the payload
    for user in payload.splitlines():
        print(" == Creating user %s" % (user))
        # note: useradd -p expects an already-encrypted password
        os.system('sudo useradd -p ubuntu -m %s' % (user))
    print("==== end ctype=%s filename=%s" % (ctype, filename))

Most of the code is just boilerplate. The code needs to implement two functions. The first, "list_types()", returns a list of the content types this code can handle. In our case, we return "text/plain" and "text/go-cubs-go". Note that when we ran "write-mime-multipart" we used the mime type text/plain, and that is why cloud-init invokes this code to handle those parts: the code advertises that it can handle text/plain. The second function the code needs to implement is "handle_part()". It is called once at the very beginning and once at the very end, for initialization and tear-down. It is also called once for each input file (2 times in our case). A sample run looks like

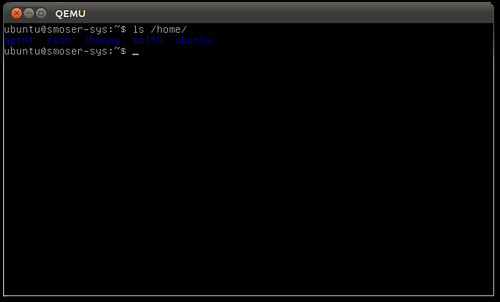

and sure enough, the 4 users were created
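If you want to try the calling sequence without booting an instance, a tiny local harness helps. The Python sketch below is not cloud-init's real dispatch code, only an imitation of the order of calls described above, and its handler records the useradd commands instead of running them. The names `make_recording_handler` and `dispatch` are made up for this example.

```python
# Sketch only: imitates the begin / per-part / end calling order locally.

def list_types():
    return ["text/plain", "text/go-cubs-go"]


def make_recording_handler(log):
    """Build a handle_part() that appends to `log` instead of creating users."""
    def handle_part(data, ctype, filename, payload):
        if ctype in ("__begin__", "__end__"):
            log.append(ctype)
            return
        for user in payload.splitlines():
            if user.strip():
                log.append("useradd " + user.strip())
    return handle_part


def dispatch(handle_part, handled_types, parts):
    """Call the handler once at start, once per matching part, once at end."""
    handle_part(None, "__begin__", None, None)
    for ctype, filename, payload in parts:
        if ctype in handled_types:
            handle_part(None, ctype, filename, payload)
    handle_part(None, "__end__", None, None)
```

Passing the contents of "one" and "two" as text/plain parts should leave four useradd entries in the log, bracketed by the begin and end markers.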

That should be everything you need to know about writing custom mime-type handlers as extensions to cloud-init. Pretty amazing stuff indeed! If you have any questions or comments, leave them below

Labels: canonical, cloud, cloud-init, custom, handler, ubuntu, ubuntu-planet
