So you wanted to play with Ubuntu Enterprise Cloud (UEC), but didn't have a couple of spare machines to play with? Want to start a UEC instance right now? No problem. You can use an Amazon EC2 server instance as the base server to install and run UEC on! Of course, the EC2 instance is itself a virtual machine, so running a VM inside it would require nested virtualization, which AFAIK doesn't work on EC2. The trick here is that we temporarily switch UEC's hypervisor to qemu, which emulates the guest in software instead of relying on hardware virtualization. Of course this won't win any performance competitions; in fact it'd be quite slow in production, but for playing around it fits the bill just fine.

If you're thinking all of that is going to be complex, you have a point, but it won't be! In fact it'll be very easy, thanks to the efforts of Ubuntu's always awesome Scott Moser. Scott has written a script that automates all the needed steps. But wait, it gets better: we're not even going to run this script ourselves. We pass it as a parameter when launching the EC2 instance, and thanks to Ubuntu's cloud-init technology the script is run when the instance boots, doing its work automagically. Now let's get started

On your local machine, let's install bzr and get the needed script

cd /tmp

sudo apt-get install bzr -y

bzr branch lp:~smoser/+junk/uec-on-ec2

cd uec-on-ec2/

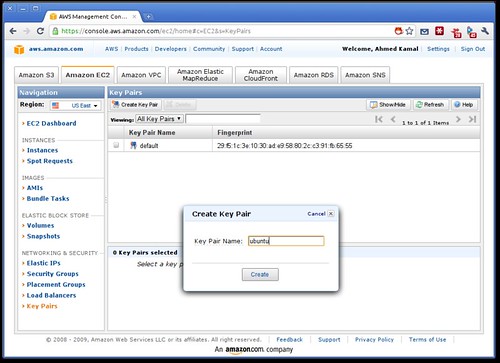

The file "maverick-commands.txt" contains the script needed to turn the generic Ubuntu image on EC2 into a fully operational single-node UEC install. If you don't have SSH keys (seriously?), generate some

ssh-keygen -t rsa

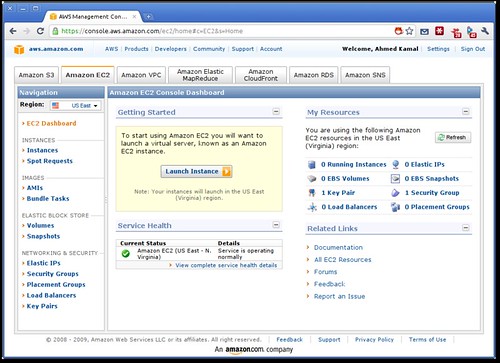
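If you end up scripting these steps, you may want to generate a key only when one is missing. A minimal sketch, assuming the default key path ~/.ssh/id_rsa; the function name ensure_ssh_key is made up for illustration:

```shell
# Hypothetical helper: generate an RSA key pair only if the public key is missing.
# Takes the private key path as an optional argument (default: ~/.ssh/id_rsa).
ensure_ssh_key() {
    key="${1:-$HOME/.ssh/id_rsa}"
    if [ -f "$key.pub" ]; then
        echo "found $key.pub"
        return 0
    fi
    # Same ssh-keygen call as above, with the output path made explicit.
    ssh-keygen -t rsa -f "$key"
}
```

Calling ensure_ssh_key with no argument checks ~/.ssh/id_rsa.pub and only falls through to ssh-keygen when that file does not exist.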

Now let's do a neat trick to import the keys into EC2, marking them with the name "default"

for r in us-east-1 us-west-1 ap-southeast-1 eu-west-1; do ec2-import-keypair --region $r default --public-key-file ~/.ssh/id_rsa.pub ; done

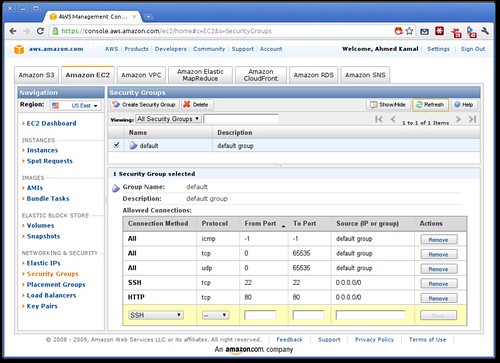

Let's open a few needed ports in EC2's default security group

for port in 22 80 8443 8773; do ec2-authorize default -p $port ; done

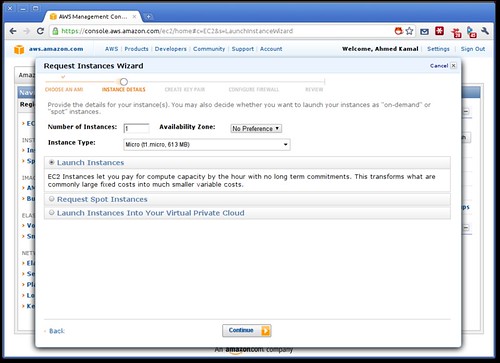

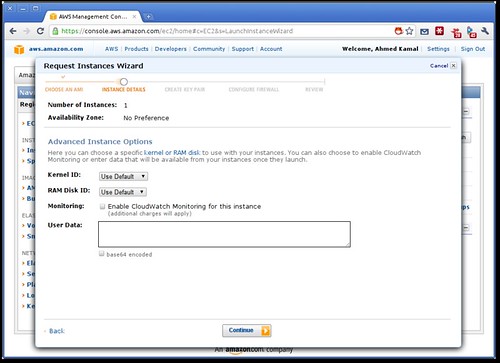

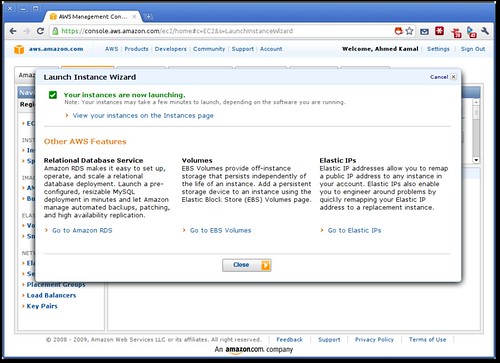

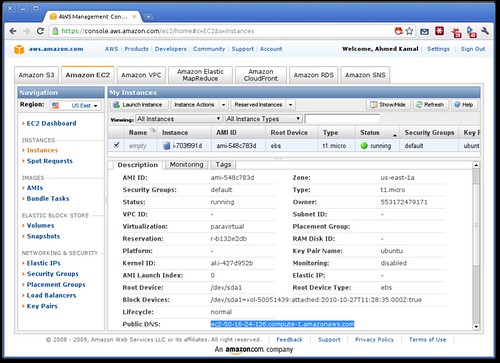

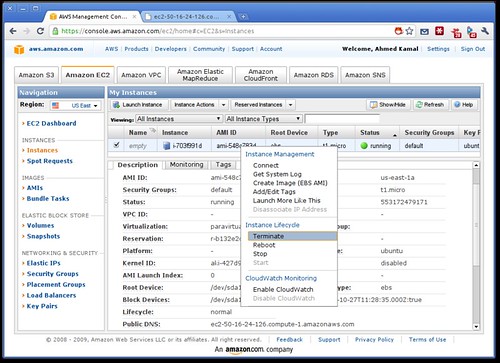

Very well. We now launch our EC2 instance, passing in the "maverick-commands.txt" file. What happens is this: the server instance is created and booted, Ubuntu's cloud-init reads the maverick commands script we passed in and executes it, and the script starts downloading, installing and configuring UEC in the background while you ssh into your new EC2 instance

ec2-run-instances ami-688c7801 --instance-type m1.large -k default --user-data-file=maverick-commands.txt
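To get a feel for the mechanism without the full UEC setup, here is a tiny user-data script of the same kind. It is purely illustrative and is not the contents of maverick-commands.txt; the log path is an assumption. cloud-init treats user data that starts with "#!" as a script and runs it on first boot:

```shell
#!/bin/sh
# Hypothetical user-data script (NOT the contents of maverick-commands.txt).
# cloud-init runs user data that starts with "#!" as a script on first boot.
LOG="${LOG:-/tmp/user-data-demo.log}"   # assumed log path, for illustration only

{
    echo "user-data script ran at $(date)"
    echo "running as: $(id -un)"
} >> "$LOG" 2>&1
```

Saved as, say, hello.txt, it would be passed exactly like the maverick commands file, via --user-data-file=hello.txt.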

Give it a minute to boot, then try to ssh in

ec2-describe-instances

ssh ubuntu@ec2-a-b-c-d.compute-1.amazonaws.com
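The host name in the ssh command above is a placeholder. If you'd rather not copy the real one by hand, a rough sketch like the following can pull it out of the ec2-describe-instances output. It assumes the public DNS name has the ec2-a-b-c-d...amazonaws.com shape shown above and simply takes the first match; the function name extract_public_dns is made up for illustration:

```shell
# Hypothetical helper: read ec2-describe-instances output on stdin and print
# the first public DNS name of the form ec2-a-b-c-d.<something>.amazonaws.com.
extract_public_dns() {
    grep -oE 'ec2-[0-9]+-[0-9]+-[0-9]+-[0-9]+\.[a-z0-9.-]+\.amazonaws\.com' | head -n 1
}

# Usage (needs the EC2 API tools and a running instance):
#   ssh ubuntu@"$(ec2-describe-instances | extract_public_dns)"
```

Note that it prints nothing while the instance is still pending, and that with several running instances you'd want to filter by instance ID first.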

Replace the DNS name in the ssh command with the actual one that ec2-describe-instances reports for your instance. Once logged into the EC2 instance, I would start byobu and tail the log file to monitor progress. The whole thing takes less than 5 minutes

byobu

tailf uec-setup.log

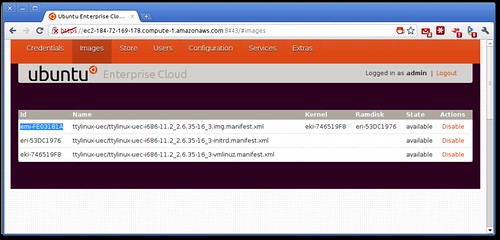

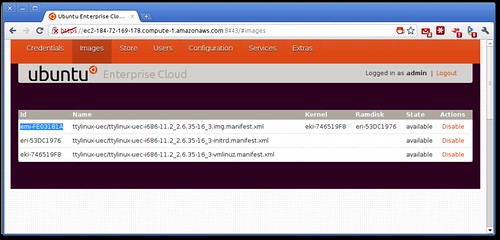

Once the script has finished configuring UEC, it downloads a tiny Linux distro and registers its image in UEC, so that you can start your own instances! You know the script has finished when you see a line that looks like

emi="emi-FDC21818"; eri="eri-53721963"; eki="eki-740D19EC";

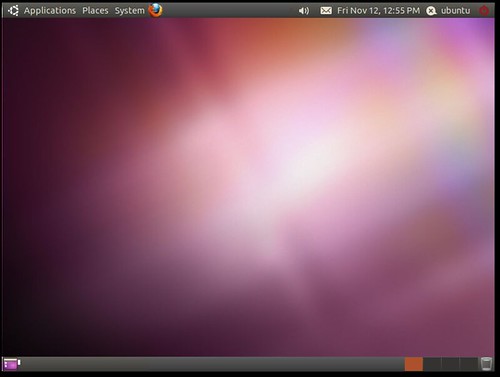
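If you don't feel like staring at the log, the wait can be scripted. A minimal sketch, assuming the final line always has the emi="emi-..." shape shown above; both function names are made up for illustration:

```shell
# Hypothetical helpers; the log format is assumed to match the line shown above.

# Print the EMI ID from the last 'emi="emi-..."' line in the given log file.
extract_emi() {
    sed -n 's/.*emi="\(emi-[0-9A-Fa-f]*\)".*/\1/p' "$1" 2>/dev/null | tail -n 1
}

# Poll the log every 5 seconds until the EMI line shows up, then print the ID.
wait_for_emi() {
    until [ -n "$(extract_emi "$1")" ]; do
        sleep 5
    done
    extract_emi "$1"
}

# Usage on the EC2 instance:
#   emi=$(wait_for_emi uec-setup.log)
```

The captured ID can then be used wherever an EMI ID is needed, instead of copying it from the log or the web interface.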

UEC is now up and running, so let's check the web interface (over https on port 8443 of the EC2 instance, one of the ports we opened earlier)! You log in with the default credentials admin/admin

Let's navigate to the Images tab to get the EMI ID (the equivalent of an AMI ID)

Is that cool or what? Hell yes! Now let's start our own VM inside UEC, which is itself inside EC2. Remember to replace emi-FE03181A with the EMI ID you got from the web interface

euca-run-instances --key mykey --addressing private emi-FE03181A

You can use "euca-describe-instances" to get the new instance's internal IP address and ssh to that

ubuntu@domU-12-31-38-01-85-91:~$ ssh -i euca/mykey.pem ubuntu@172.19.1.2

The authenticity of host '172.19.1.2 (172.19.1.2)' can't be established.

RSA key fingerprint is db:9b:47:a4:06:81:26:d7:cf:38:a4:0e:6c:05:54:0d.

Are you sure you want to continue connecting (yes/no)? yes

Warning: Permanently added '172.19.1.2' (RSA) to the list of known hosts.

Chop wood, carry water.

$ uname -r

2.6.35-16-virtual

$ df -h

Filesystem Size Used Available Use% Mounted on

/dev/sda1 23.2M 14.1M 7.9M 64% /

tmpfs 24.0K 0 24.0K 0% /dev/shm

Et voilà, you're ssh'ed into a ttylinux instance running inside qemu, managed by UEC, running on EC2 :) If you find that cool, what about contributing back? How about hacking on that script to make it even more awesome, such as installing over multiple nodes or whatever crazy idea you can think of! If you're interested in hacking on it, drop me a hi in #ubuntu-cloud on Freenode